DuckDB-Powered Postgres

Announcing pg_duckdb, embedding DuckDB’s columnar-vectorized analytics engine and features into Postgres.

While once considered nice-to-have features for an application database, analytics now represent a competitive advantage and drives better customer experiences across enterprise and consumer applications. Developers across every industry are increasingly embedding powerful insights directly into their applications. We’ve observed the rise of data-intensive apps that require both greater access to disparate data sources and sub-second response times.

Most new applications today are powered by Postgres and it’s no surprise. In the 2024 Stack Overflow Developer Survey, Postgres ranked #1 for the most desired and #1 for the most admired database on earth of the 51,992 responses. Postgres, for all intents and purposes, is the application database of 2024 powering 10s of thousands of apps. While Postgres has many strengths, it’s analytics limitations can’t reasonably serve the new generation of data-intensive apps that place emphasis on analytics and interactivity.

There are many old and some new ways to tweak analytics performance on Postgres; most quick fixes don’t work well and scale poorly when requirements shift and complexity grows. Modern developers should have access to a state-of-the-art analytics engine within their application database.

A simple, low-cost, open source, ducky architecture

We’re happy to announce pg_duckdb, an open source (MIT licensed) project that embeds DuckDB’s columnar-vectorized analytics engine and features into Postgres. Built in collaboration with the DuckDB Foundation and MotherDuck, pg_duckdb is for developing high performance applications and analytics.

pg_duckdb adds to Postgres:

- Access to broader dataset in Object Storage

- State-of-the-art analytics performance

- DuckDB features, such as COPY TO parquet format

DuckDB abilities meet Postgres

If you’d like to read the full list of features, check out the pg_duckdb README

- SELECT queries executed by the DuckDB engine can directly read Postgres tables. pg_duckdb can read data types that exist in both Postgres and DuckDB. The following data types are supported: numeric, character, binary, date/time, boolean, uuid, json, and arrays. If DuckDB cannot support the query for any reason, execution falls back to Postgres.

- Read parquet and CSV files from object storage (AWS S3, Cloudflare R2, or Google GCS).

SELECT n FROM read_parquet('s3://bucket/file.parquet') AS (n int)SELECT n FROM read_csv('s3://bucket/file.csv') AS (n int) - Enable the DuckDB Iceberg extension to read Iceberg files with iceberg_scan:

SELECT duckdb.enable_extension('iceberg') - Write a query — or an entire table — to parquet in object storage.

COPY (SELECT foo, bar FROM baz) TO 's3://...'COPY table TO 's3://...' - Read and write to Parquet format in a single query

- Query and JOIN data in object storage with Postgres tables, views, and materialized views.

- Create indexes on Postgres tables to accelerate your DuckDB queries

Getting Started

The best way to get started is to connect Postgres to a new or existing object storage bucket (AWS S3, Cloudflare R2, or Google GCS) with pg_duckdb. You can query data in Parquet, CSV, and Iceberg format using read_parquet, read_csv, and iceberg_scan respectively.

1. Add a credential to enable DuckDB's httpfs support.

INSERT INTO duckdb.secrets(cloud_type, cloud_id, cloud_secret, cloud_region)

VALUES ('S3', 'access_key_id', 'secret_accss_key', 'us-east-1');2. Copy data directly to your bucket - no ETL pipeline!

COPY (SELECT user_id, item_id, price, purchased_at FROM purchases)

TO 's3://your-bucket/purchases.parquet';3. Perform analytics on your data.

SELECT SUM(price) AS total, item_id

FROM read_parquet('s3://your-bucket/purchases.parquet')

AS (price float, item_id int)

GROUP BY item_id

ORDER BY total DESC

LIMIT 100;Open Source Supporters

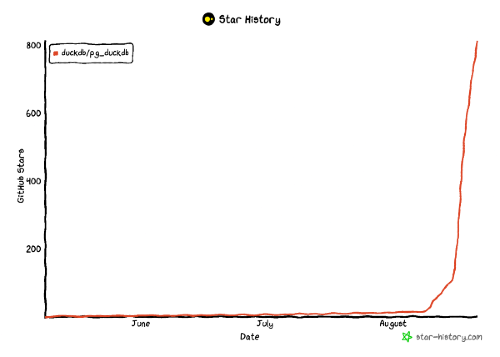

We’ve been overwhelmed by positive comments and support from the open source community. After announcing pg_duckdb at DuckCon on Thursday, our GitHub repo trended over the weekend!

Our team would like to offer a few special words of gratitude to the following supporters, many of whom are providing financial backing to the development of pg_duckdb:

Ovais Tariq of Tigris Data, the Globally Distributed S3-Compatible Object Store

Tom Hacohen of Svix, Webhooks as a Service

Owain Brennan of SeerBI, Data Science / ML lab

As well as the teams from Infisical, Mintlify, Dagster, ElectricSQL, Nextgres, GoldSky, Drizzle, SuperTokens, TableFlow, QuestDB, PostgresML, NeoSync, Firecrawl, Positional and more.

If you’d like to support the open source development of pg_duckdb, please head over to hydra.so and click the “Become a Supporter” button to learn more.

Looking Forward: MotherDuck

The pg_duckdb extension will be fully capable of querying against data stored in the cloud in MotherDuck as if it were local. MotherDuck's "dual execution" capabilities let us join local Postgres data against MotherDuck data seamlessly, and we will figure out the best place to run the query. As a user, you don't really need to care where the computation runs, we'll just figure out how to make it run fast.

Moreover, it is common in analytics to want to offload your data from your transactional database into an analytical store. The pg_duckdb extension along with MotherDuck can help; you can just run a query in Postgres that pulls recent data from your Postgres database and write it to MotherDuck. You don't need to export and reimport data, or set up CDC.

Analytics can be resource hungry in terms of the amount of memory and CPU needed to make it run well. Depending on use-case requirements, teams may prefer using MotherDuck to help offload analytics to the cloud. Ideally, developers won't even have to change the queries that they're running; they just get faster.

Head over to MotherDuck’s post “Splicing Duck and Elephant DNA” to learn more about the coming MotherDuck integration.